The hardware for long-term archival storage is currently dominated by magnetic media: hard disk drives (“HDD”) and, to a lesser extent, magnetic tape. The expansive growth of digital data has triggered new strategies for storing vast amounts of data that need to be preserved forever. Data that is active and in motion is kept on technologies suited to the ‘hot’ data tier, while data at rest and ready for preservation moves to the long-term ‘cold’ archive tier. The goal is to maintain expense and performance levels while reducing the long-term risks of data corruption and loss.

In addition to cost containment, the move from “big data” to “long data” through optical storage technology aims to solve the long-term data permanence challenge, especially given the number of conventional storage technologies to choose from when storing replicated data. Archiving is not as easy as keeping old-fashioned business records in files or on shelves. There is, to begin with, the danger of costly data loss and corruption as data centers migrate previously archived data to new media every five years through a process known as “remastering”. Data center managers seeking cost-containment opportunities should also revisit how media acquisition costs have changed since a time, long past, when hard disk and tape suppliers were able to increase areal density continuously, which for a time produced sizable annual unit cost reductions in equilibrium with rapidly growing stored volume. Optical storage technology aims to shatter that equilibrium.

Next-Gen Optical Media (NGOM)

The research and development of next-generation optical media is now driving new economics through increased capacity, long-term durability, reduced maintenance, and media lifetimes of up to a century or longer, which will allow it to rival the total cost of ownership of other storage technologies in the face of rapid data growth and preservation requirements. At issue is the assessment of data storage and security in the wake of a changing digital business landscape, political shifts, and climate change, which make continuously archiving capacity over indefinite periods of time potentially dangerous and cost-prohibitive.

To understand the advantage of NGOM, the cost of acquisition has to be annualized when it is set side by side with other options. Those options appear less costly, at least in the short run, especially when the periodic migration of archived data to new media and the capacity/cost roadmap are disregarded. Although optical discs currently have a higher cost per gigabyte, their advantages become evident when set against the ever-diminishing returns over time of competing systems.
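
To make the annualization concrete, here is a minimal sketch of the comparison. Every figure in it is a made-up placeholder rather than a quoted price, the function name `annualized_media_cost` is our own, and prices are held flat over the whole horizon, which deliberately ignores the capacity/cost roadmap.

```python
import math

def annualized_media_cost(cost_per_tb, capacity_tb, media_life_years,
                          horizon_years, migration_cost_per_tb=0.0):
    """Average yearly cost of keeping capacity_tb on one medium.

    The media is bought once up front and bought again (plus a migration
    fee) each time its service life expires inside the horizon.
    Prices are assumed flat, which is a simplification.
    """
    purchases = math.ceil(horizon_years / media_life_years)
    migrations = purchases - 1  # the first purchase is not a migration
    total = capacity_tb * (purchases * cost_per_tb
                           + migrations * migration_cost_per_tb)
    return total / horizon_years

# Hypothetical inputs: a 1,000 TB archive retained for 50 years.
hdd = annualized_media_cost(cost_per_tb=20.0, capacity_tb=1000,
                            media_life_years=5, horizon_years=50,
                            migration_cost_per_tb=5.0)
optical = annualized_media_cost(cost_per_tb=60.0, capacity_tb=1000,
                                media_life_years=50, horizon_years=50)
print(f"HDD: {hdd:,.0f} per year, optical: {optical:,.0f} per year")
```

With these placeholder inputs the medium that costs three times as much per terabyte comes out cheaper per year, because it is bought once instead of ten times. Different inputs can reverse the result, which is why the calculation is worth running with real quotes.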

Hard disk drives and optical discs: A matter of diminishing returns

Hard disk drives (HDD) have served for many decades as a principal means of storing and replicating data, but there is reason to be cautious as we observe the unfolding of Kryder’s prognosis, now enshrined as Kryder’s Law, that the density of information we can get on a hard drive is at least as important to enabling new applications as advances in semiconductors. Kryder’s Law says disk storage will keep on getting cheaper.

Yet that is unlikely to remain the case in light of the explosion of archival data and requirements for long retention, especially as it gets ever more difficult and much more expensive to keep cramming bits onto disk platters, let alone reading them. Kryder’s Law is “slowing down” as HDD approaches the fundamental areal density limit associated with magnetic domains. In fact, the growth in areal density has fallen sharply from over 100% per year to the current 15% per year, especially given the failure so far to overcome the energy barrier to perpendicular writing as the bit size decreases. Of late, only the inclusion of multiple platters in a single package has allowed HDD technology to increase in capacity and decrease in production cost. Heat-assisted magnetic recording (HAMR) has been in development for over a decade, and the first HAMR-based hard drives have launched with limited availability. HAMR increases areal density by heating the platter with a laser so that smaller bits can be written magnetically into the special platter’s comparatively harder material. Mass commercial availability is not expected until 2025.
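
The gap between the two growth rates is easier to appreciate when compounded. The short sketch below assumes each rate stays constant from year to year, which is a simplification, and the helper names are ours.

```python
import math

def doubling_time_years(annual_growth):
    """Years for areal density to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)

def density_multiple(annual_growth, years):
    """Factor by which areal density grows over the given number of years."""
    return (1 + annual_growth) ** years

for rate in (1.00, 0.15):  # the two rates quoted in the text
    print(f"{rate:.0%} per year: doubles every "
          f"{doubling_time_years(rate):.2f} years, "
          f"grows {density_multiple(rate, 10):,.2f}x in a decade")
```

At 100% per year density doubles annually and grows more than a thousandfold in ten years. At 15% per year a doubling takes about five years and a decade yields only about a fourfold gain.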

Magnetic tape: Its strength and hidden costs

While the shelf life of tape is long, up to several decades under special conditions, its useful life and its recognized costs depend on the number of accesses, the storage environment, and the lack of backward compatibility of its read/write drives. For tape, backward compatibility of one to two generations is expected. That means that if old drives are not kept on hand, only a migration interval of something under 10 years may be sustainable for the long term. For enterprises where data growth is sufficiently low and which are willing to keep old drives on hand for data retrieval, periodic tape migration is the hidden cost factor of long-term storage. Tape migration costs involve making second copies, storing second copies, and acquiring new tape media for refresh, sometimes every three years, to prevent bit flips, curling or ‘bit rot’.

The lack of random access is the principal drawback of tape and a significant issue for active archiving. The optical format can locate files in about 12 seconds, whereas LTO takes upwards of 100 seconds. This discrepancy adds up significantly when searching for multiple files spread across the archive. If you’re looking for something specific in the archive, tape is going to mean trouble, vexation, and cost.
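
As a rough illustration of how the per-file gap accumulates, the sketch below multiplies the two locate times quoted above by a hypothetical job size. It assumes files are located one after another on a single drive with no caching or parallelism, so it is an upper-bound style estimate rather than a benchmark.

```python
OPTICAL_SECONDS_PER_FILE = 12   # "about 12 seconds" in the text
LTO_SECONDS_PER_FILE = 100      # "upwards of 100 seconds" in the text

def total_locate_hours(file_count, seconds_per_file):
    """Hours spent locating files when lookups run strictly in sequence."""
    return file_count * seconds_per_file / 3600

files = 500  # hypothetical retrieval job
optical_hours = total_locate_hours(files, OPTICAL_SECONDS_PER_FILE)
tape_hours = total_locate_hours(files, LTO_SECONDS_PER_FILE)
print(f"optical: {optical_hours:.1f} h, tape: {tape_hours:.1f} h")
```

For 500 scattered files the difference is between under two hours and most of a working day and night, before any data has actually been transferred.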

Although tape and optical drives consume much less energy, the media differ in how resilient they are to climate, and maintaining conditions through air conditioning and humidity control adds cost. The hottest and most humid regions on earth lie near the equator and along coasts. Cities like Melbourne and Singapore have very high humidity year-round, with averages over 70%. Moreover, data shows that global warming is pushing extreme temperature changes across the globe.

Optical discs can be stored quite safely between 15 and 131 degrees Fahrenheit, while data tapes start to deteriorate when stored below 61 degrees or above 95 degrees. The problem with tape’s quoted shelf life is that magnetic tapes will only last that long under absolutely optimum environmental conditions. That means you need to keep magnetic tapes in a place where both humidity and temperature are stable and within the tolerance margins of the technology, or it will cost big time. Depending on the amount of heat generated by server equipment and the regional temperature zone, a data center’s power usage effectiveness (the ratio of total facility power, including cooling, to the power drawn by the equipment itself) can reach a value of 2 or more. Reducing the kilowatt-hours used for cooling allows for meaningful savings over time.
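
The cooling argument can be put into numbers using the standard definition of power usage effectiveness, PUE = total facility energy / IT equipment energy. The sketch below is only illustrative: the 100 kW load, the two PUE levels and the electricity price are assumptions, and the overhead it computes covers everything that is not IT equipment (cooling is usually the largest part, but not all of it).

```python
def facility_overhead_kwh(it_load_kw, pue, hours=8760):
    """Annual energy used by everything except the IT equipment.

    Since PUE = total energy / IT energy, the overhead is
    IT energy * (PUE - 1). 8760 is the number of hours in a year.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * hours * (pue - 1.0)

# Hypothetical 100 kW storage load at two PUE levels.
high = facility_overhead_kwh(100, pue=2.0)
low = facility_overhead_kwh(100, pue=1.4)
price_per_kwh = 0.10  # assumed price, in currency units per kWh
saved_kwh = high - low
print(f"saved: {saved_kwh:,.0f} kWh, "
      f"about {saved_kwh * price_per_kwh:,.0f} per year")
```

A PUE of 2 means the facility spends as much energy on overhead as on the equipment itself, so media that tolerate a wider temperature range give an operator room to lower that ratio.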

A major difference between hard disk drives on the one hand and tape and optical on the other is that, in the latter, the drive and the media are separate. A hard drive and its storage platters are essentially one unit: you can remove the drive, but you cannot remove the platters inside it. Tape and optical drive designs are based on removable media, which is housed in a cartridge. In some optical products the discs are built into a casing and cannot be removed from the cartridge. By contrast, in cartridges designed by Zerra, individual discs can be inserted into and removed from the cartridge itself. The benefit of removable media is the lower cost of the physical drives needed for archival.

Solid-state drives and hidden costs

Solid-state drives (SSD) cost more than HDD, and their shelf life is highly compromised by high-volume read-write cycles. Even when they are seldom accessed, the remaster cycle remains short, as short as the five years allotted to HDD. NAND flash memory can only be written a certain number of times to each block (or cell). Once a block (or cell) has been written to its limit, it starts to forget what is stored, and cell decay causes data corruption. Given their high-performance nature and their cost, SSDs are rarely considered or used as a storage medium for long-term preservation, especially as their cost per GB is higher than that of HDDs. It is common to remaster SSDs every five years, depending on the uncertainty in the number of accesses. Indeed, it is often recommended that HDD be added to SSD to improve performance and security.
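
The write limit described above can be turned into a rough lifetime estimate. The sketch below is a common back-of-the-envelope model, not a vendor formula: the capacity, cycle rating, daily write volumes and write amplification factor are all hypothetical, and it assumes perfect wear leveling. It covers wear from writes only and says nothing about how long an idle drive retains data.

```python
def ssd_write_life_years(capacity_gb, pe_cycles, daily_writes_gb,
                         write_amplification=1.0):
    """Years until the rated program/erase (P/E) cycles are used up.

    Assumes perfect wear leveling, so every block ages at the same rate.
    write_amplification is the ratio of data physically written to flash
    to data written by the host.
    """
    total_host_writes_gb = capacity_gb * pe_cycles / write_amplification
    return total_host_writes_gb / (daily_writes_gb * 365)

# Hypothetical drive: 1,000 GB, rated for 3,000 P/E cycles.
busy = ssd_write_life_years(1000, 3000, daily_writes_gb=2000,
                            write_amplification=2.0)
quiet = ssd_write_life_years(1000, 3000, daily_writes_gb=50,
                             write_amplification=2.0)
print(f"busy workload: {busy:.1f} years, quiet workload: {quiet:.1f} years")
```

The spread between the two results shows why the number of accesses dominates any SSD lifetime estimate, and why an uncertain access pattern pushes operators toward a conservative remaster interval.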

Think long

As random-access media, optical discs retain the advantages of faster access to data, durability, integrity, and longevity. The write-once, read-many (WORM) media of optical technology also offers the added benefit of data permanence, since bits are etched into the optical media to provide proof of finality. The media lifetime is also more resistant to the number of accesses, moisture, temperature fluctuations, natural or man-made disasters, and electromagnetic pulses.

The cost per GB of optical discs is catching up. Optical disc manufacturers offer capacities of 100 to 300 GB per disc, with discs encased in cartridges of 5.5 TB and a roadmap for these cartridges to reach 10 TB in the next few years. Data capsules of 200 discs with a capacity of 20 TB, using 100 GB two-sided discs, are already widely available. Disc capacity and drive performance are increased by increasing the number of layers on the disc and the number of laser optical pickup heads in the drive. The next evolution of optical storage is under development: the storage medium is made of hard crystal glass, and femtosecond lasers etch ‘voxels’ into it. Voxels may be written 100 or more layers deep in a 2 mm thick piece of glass by focusing the laser to the desired depth within the glass media. Media lifetimes are expected to extend to millions of years thanks to the next-generation media’s quartz structure. The drawback of this new method is its read and write performance: it took roughly a week to write a 76 GB file, and nearly 3 days to read it. Nonetheless, development and commercialization efforts are ongoing. Until then, optimized disc layers and laser heads will help increase media capacity and contain its related costs.
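
The capacity and performance figures above can be checked with a little arithmetic. The sketch below uses only numbers from the text and assumes decimal units (1 TB = 1,000 GB, 1 GB = 1,000 MB), and it treats “roughly a week” and “nearly 3 days” as exactly 7 and 3 days, so the rates are approximate.

```python
SECONDS_PER_DAY = 86_400

def capsule_capacity_tb(disc_count, disc_gb):
    """Raw capsule capacity in decimal terabytes."""
    return disc_count * disc_gb / 1000

def throughput_mb_per_s(size_gb, days):
    """Average transfer rate in decimal MB/s for size_gb moved over days."""
    return size_gb * 1000 / (days * SECONDS_PER_DAY)

capsule_tb = capsule_capacity_tb(200, 100)  # 200 discs of 100 GB
write_rate = throughput_mb_per_s(76, 7)     # 76 GB written in about a week
read_rate = throughput_mb_per_s(76, 3)      # 76 GB read in about 3 days
print(f"{capsule_tb:.0f} TB per capsule, "
      f"write {write_rate:.3f} MB/s, read {read_rate:.3f} MB/s")
```

The capsule figure checks out at 20 TB. The glass-media rates work out to a fraction of a megabyte per second, which puts the performance drawback in perspective.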

However, the true cost of long-term storage is determined not only by its cost per GB, but also by the operating budgets and work required to maintain the refresh cycle of moving data in the archival and remastering process. Additionally, a recent study indicated that over 70% of the data stored on expensive primary storage is seldom accessed. Moving rarely accessed data to less expensive random-access storage, such as optical discs, helps to conserve energy, contain costs, and reserve budget for higher-performance storage requirements.

Total cost of ownership of data storage

While optical media and libraries do not eliminate the need for migrations, they allow IT managers to reduce the number of forced migrations that policies and media shelf life dictate. Optical storage also acts as a layer of insurance: the value of the data in custody is safeguarded, risks are mitigated, and its managers can be held accountable for cost containment. In fact, history so far has shown that ongoing maintenance, power and cooling, and their associated costs are the biggest challenges for data storage and its custodians. It isn’t until you break down all the indirect costs, support technologies, and outsourced services that you start to get even a surface reflection of the complexity and total costs incurred to keep it all continuously under control. Of course, every organization’s purpose and performance requirements are different, and so is the weight it gives to each of these TCO considerations.

TCO considerations

| | | |
|---|---|---|
| Costs of additional media capacity per year | Management and administrative staff costs for life of the system | Migration of data to new storage medium and new drives costs |
| Energy, cooling and backup power supply costs | Equipment energy power consumption costs | Storage area networking costs |
| Service provider data transfer/retrieval fees, cloud outsourcing costs | Physical and cyber security costs | Disaster recovery costs including secondary sites |
| Data center space, floor space, offsite warehousing costs | Software file systems, storage management tools, and licensing costs | Loss of data or loss of business costs |
| Data lifecycle management costs, archival, deduplication, compression, knowledge management costs | Monitoring system, performance management, and virtualization software costs | Costs related to waiting for storage to be provisioned, recovered, system downtime, or brought online for data accessibility |
| Over-provisioning capacity costs | Testing, integration, and installation of new technologies costs | Transportation costs related to data storage forklifts or movement |
| Procurement, finance, and insurance costs | Spare parts and warranty costs | |
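
One way to put the considerations above to work is to record each one as either a one-time or a recurring cost line and sum them over the retention period. The sketch below is a bare-bones illustration with invented figures and a hypothetical `TcoModel` class. It does not discount future costs and is not the method used by any study cited here.

```python
from dataclasses import dataclass, field

@dataclass
class TcoModel:
    """Sums one-time and recurring cost lines over a retention horizon."""
    years: int
    one_time: dict = field(default_factory=dict)  # cost line -> amount
    annual: dict = field(default_factory=dict)    # cost line -> amount per year

    def total(self):
        """Total cost over the horizon, with no discounting."""
        return (sum(self.one_time.values())
                + self.years * sum(self.annual.values()))

    def per_tb_per_year(self, capacity_tb):
        """Total cost spread evenly over capacity and years."""
        return self.total() / (capacity_tb * self.years)

# Hypothetical figures, for illustration only.
archive = TcoModel(
    years=10,
    one_time={"installation and integration": 20_000, "migration": 15_000},
    annual={"media capacity": 8_000, "energy and cooling": 5_000,
            "staff": 12_000, "software licensing": 4_000},
)
print(archive.total(), archive.per_tb_per_year(capacity_tb=500))
```

Keeping the cost lines as named entries makes it easy to add or drop considerations per organization and to see which ones dominate the total.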

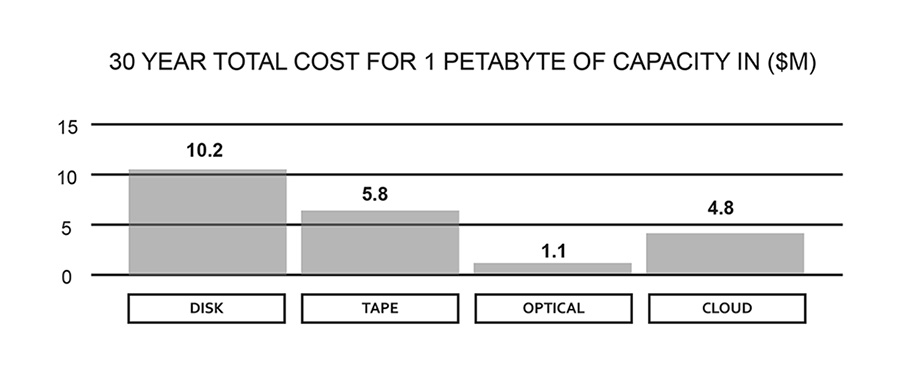

A 2015 study by Hitachi Data Systems estimated the TCO for different storage types.